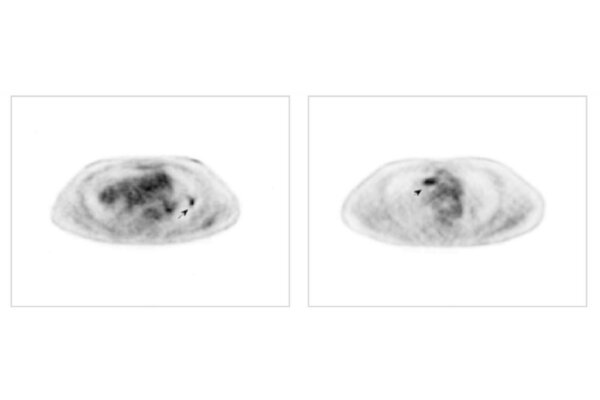

Amid headlines about artificial intelligence’s implications for everything from education to the future of work itself, Abhinav Jha and his collaborators have developed and evaluated two methods to quantitatively determine the realism of synthetic, or computer-generated, medical images. Jha is an assistant professor of biomedical engineering at the McKelvey School of Engineering and of radiology at the School of Medicine, both at Washington University in St. Louis. The findings were published in the journal Physics in Medicine & Biology.

“We’re living in a remarkable moment where new artificial intelligence tools are generating tons of synthetic content, and a key emerging question is how to distinguish human-made from AI-generated content,” Jha said. “For example, with things like ChatGPT, we need to be able to figure out what text is human authored. It’s the same with images, including medical images. We have AI generating medical images, and we need to be able to separate real from fake.”

In the paper, Jha and his collaborators, who included physicians, physicists and computational imaging scientists, present two observer-study-based approaches for evaluating the realism of synthetic images: one uses a theoretical ideal observer, and the other uses expert human observers working through an online platform. The platform, which is publicly available through the Mallinckrodt Institute of Radiology (MIR), is already being used by international collaborators in the medical imaging community.

“The big question is how do we validate the accuracy of a given image?” said collaborator Barry Siegel, MD, professor of radiology and medicine at the School of Medicine and MIR. “We need to accurately distinguish real from fake to make sure we’re only using real images for diagnostics.”

But not all fakes are bad. If scientists can create synthetic medical images that look real enough, those images can be used to conduct virtual clinical trials. Virtual trials can help imaging scientists and physicians develop new imaging techniques while saving time, money and patient exposures. These trials are especially helpful for applications in rare diseases where scientists don’t have enough real cases to perform reliable evaluations of novel imaging methods.

“Our methods to validate synthetic images help overcome that challenge and put us that much closer to virtual imaging trials, which will allow us to explore new imaging methods without the time, expense and patient risks associated with traditional medical trials,” Siegel said.

“Another application of this method is in the context of training AI algorithms,” Jha said. “Using AI for medical imaging is tricky since we typically don’t have enough real images to train algorithms. Use of synthetic images provides a way to address this issue, but studies have shown that such images should be realistic. Our tool could be used to assess this realism.”

Jha notes that computer-based trials will require representative and realistic patient populations, accurate scanner models and realistic “virtual doctors” to read and interpret images. His group and others are working on all three components to make virtual medical trials a reality.

More information:

Ziping Liu et al, Observer-study-based approaches to quantitatively evaluate the realism of synthetic medical images, Physics in Medicine & Biology (2023). DOI: 10.1088/1361-6560/acc0ce

Journal information:

Physics in Medicine and Biology