When Plato set out to define what made a human a human, he settled on two primary characteristics: We do not have feathers, and we are bipedal (walking upright on two legs). Plato’s characterization may not encompass all of what identifies a human, but his reduction of an object to its fundamental characteristics illustrates the idea behind a technique known as principal component analysis.

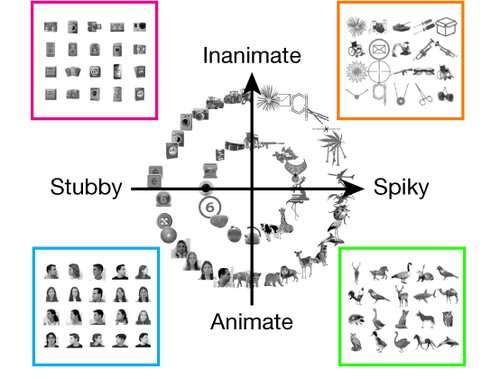

Now, Caltech researchers have combined tools from machine learning and neuroscience to discover that the brain uses a mathematical system to organize visual objects according to their principal components. The work shows that the brain contains a two-dimensional map of cells representing different objects. The location of each cell in this map is determined by the principal components (or features) of its preferred objects; for example, cells that respond to round, curvy objects like faces and apples are grouped together, while cells that respond to spiky objects like helicopters or chairs form another group.

The research was conducted in the laboratory of Doris Tsao (BS ’96), professor of biology, director of the Tianqiao and Chrissy Chen Center for Systems Neuroscience and holder of its leadership chair, and Howard Hughes Medical Institute Investigator. A paper describing the study appears in the journal Nature on June 3.

“For the past 15 years, our lab has been studying a peculiar network in the primate brain’s temporal lobe that is specialized for processing faces. We called this network the ‘face patch network.’ From the very beginning, there was a question of whether understanding this face network would teach us anything about the general problem of how we recognize objects. I always dreamed it would, and now this has been vindicated in a startling way. It turns out that the face patch network has multiple siblings, which together form an orderly map of object space. So, face patches are one piece of a much bigger puzzle, and we can now begin to see how the entire puzzle is put together,” says Tsao.

The brain’s inferotemporal (IT) cortex is a critical center for the recognition of objects. Different regions or “patches” within the IT cortex encode for the recognition of different things. In 2003, Tsao and her collaborators discovered that there are six face patches; there are also patches that encode for bodies, scenes, and colors. But these well-studied islands only make up some of IT cortex, and the functions of the brain cells located in between them have not been well understood.

Pinglei Bao, a postdoctoral scholar in the Tsao laboratory, wanted to understand these unknown regions of the IT cortex. Working with nonhuman primates, Bao first stimulated a region of IT cortex that did not belong to any of the previously defined patches and measured how other parts of IT responded to stimulation using functional magnetic resonance imaging (fMRI). In doing so, he discovered a new network: three regions of the IT cortex that were driven by the stimulation. He called this network the “no man’s land network,” since it belonged to an uncharted region of IT cortex.

To determine what kind of objects the new network responded to, Bao showed the primates images of thousands of different objects while he measured neurons’ activity in the new network. He found that the neurons responded strongly to a group of objects that seemingly had nothing in common, except for one curious feature: they all contained thin “protrusions.” That is, spiky objects such as spiders, helicopters, and chairs triggered the activity of the cells of the new network. Round, smooth objects like faces triggered almost no activity in this network.

Bao set out to mathematically describe what these objects all had in common. While a person can qualitatively describe the fundamental visible characteristics that make the shape of a chair distinct from a face, they cannot break those characteristics down to their mathematical parameters. To do that, Bao used a type of machine learning program called a deep network, which is trained to classify images of objects.

Bao took the set of thousands of images he had shown the primates and passed them through a deep network. He then examined the activations of units found in the eight different layers of the deep network. Because there are thousands of units in each layer, it was difficult to discern any patterns to their firing. Bao decided to use principal component analysis to determine the fundamental parameters driving activity changes in each layer of the network. In one of the layers, Bao noticed something oddly familiar: one of the principal components was strongly activated by spiky objects, such as spiders and helicopters, and was suppressed by faces. This precisely matched the object preferences of the cells Bao had recorded from earlier in the no man’s land network.
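The analysis step described above can be sketched in a few lines of Python. This is only an illustration, not the study's code: the activation matrix is a tiny made-up example (rows are images, columns are units in one layer), the function names are hypothetical, and power iteration with deflation stands in for whatever PCA routine the researchers actually used.

```python
import random

def center(rows):
    """Subtract each column's mean so every unit averages zero across images."""
    n = len(rows)
    means = [sum(col) / n for col in zip(*rows)]
    return [[x - m for x, m in zip(row, means)] for row in rows]

def covariance(centered):
    """Unit-by-unit sample covariance matrix of the centered activations."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]

def top_component(cov, iters=200, seed=0):
    """Power iteration: approximate the leading eigenvector and eigenvalue."""
    d = len(cov)
    rng = random.Random(seed)
    v = [rng.gauss(0, 1) for _ in range(d)]  # random start direction
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    eigval = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d))
                 for i in range(d))
    return v, eigval

def principal_components(rows, k=2):
    """Return the top-k components and each image's score on them."""
    centered = center(rows)
    cov = covariance(centered)
    d = len(cov)
    comps = []
    for _ in range(k):
        v, lam = top_component(cov)
        comps.append(v)
        # Deflate: remove the variance already explained by v.
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)]
               for i in range(d)]
    scores = [[sum(x * c for x, c in zip(row, comp)) for comp in comps]
              for row in centered]
    return comps, scores

# Made-up activations: 5 "images" x 3 "units". Units 0 and 1 vary together
# (one strong shared factor); unit 2 varies independently and more weakly.
ts = [-2.0, -1.0, 0.0, 1.0, 2.0]
ss = [1.0, -1.0, 0.0, -1.0, 1.0]
toy_activations = [[t, 2 * t, s] for t, s in zip(ts, ss)]

components, image_scores = principal_components(toy_activations, k=2)
```

In this toy case the first component lines up with the shared factor driving units 0 and 1, and the second with unit 2. With real network activations the components are not known in advance, which is why inspecting which images score high or low on each one, as Bao did, is the informative step.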

What could account for this coincidence? One idea was that IT cortex might actually be organized as a map of object space, with x- and y-dimensions determined by the top two principal components computed from the deep network. This idea would predict the existence of face, body, and no man’s land regions, since their preferred objects each fall neatly into different quadrants of the object space computed from the deep network. But one quadrant had no known counterpart in the brain: stubby objects, like radios or cups.
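The reasoning about a "missing" quadrant can be made concrete with a small sketch. Everything here is hypothetical: the object names are placeholders, the scores are invented, and the quadrants are labeled only by the sign of each component, since the sign of a principal component is arbitrary and the text does not say which category falls in which quadrant.

```python
def quadrant(pc1, pc2):
    """Label a point by the signs of its two principal-component scores."""
    horizontal = "+" if pc1 >= 0 else "-"
    vertical = "+" if pc2 >= 0 else "-"
    return f"PC1{horizontal}/PC2{vertical}"

def group_by_quadrant(scores):
    """Map each quadrant label to the objects whose scores fall inside it."""
    groups = {}
    for name, (pc1, pc2) in scores.items():
        groups.setdefault(quadrant(pc1, pc2), []).append(name)
    return groups

ALL_QUADRANTS = ["PC1+/PC2+", "PC1-/PC2+", "PC1-/PC2-", "PC1+/PC2-"]

# Invented scores for objects preferred by already-known brain networks.
known_network_objects = {
    "object_a": (1.2, 0.8),
    "object_b": (-0.9, 1.1),
    "object_c": (-1.5, -0.7),
    "object_e": (1.0, 0.3),
}

groups = group_by_quadrant(known_network_objects)
# Quadrants with no known network are candidates for undiscovered regions.
missing = [q for q in ALL_QUADRANTS if q not in groups]
```

Here `missing` lists the one quadrant that none of the placeholder objects occupy, which mirrors the logic of the prediction: if the map is real, some brain region should prefer objects from that part of the space.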

Bao decided to show primates images of objects belonging to this “missing” quadrant as he monitored the activity of their IT cortices. Astonishingly, he found a network of cortical regions that responded only to stubby objects, as predicted by the model. This means the deep network had successfully predicted the existence of a previously unknown set of brain regions.

Why was each quadrant represented by a network of multiple regions? Earlier, Tsao’s lab had found that different face patches throughout IT cortex encode an increasingly abstract representation of faces. Bao found that the two networks he had discovered showed this same property: cells in more anterior regions of the brain responded to objects across different angles, while cells in more posterior regions responded to objects only at specific angles. This shows that the temporal lobe contains multiple copies of the map of object space, each more abstract than the one before it.

Finally, the team was curious how complete the map was. They measured the brain activity from each of the four networks comprising the map as the primates viewed images of objects and then decoded the brain signals to determine what the primates had been looking at. The model was able to accurately reconstruct the images viewed by the primates.
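The text does not spell out how the decoding worked, so the following is only one simple way to picture the final step, under a stated assumption: that brain activity has already been converted into coordinates in object space, and that the viewed image is identified as the candidate whose coordinates lie closest. The names and numbers are invented for illustration.

```python
import math

def nearest_image(decoded, candidates):
    """Return the candidate whose object-space coordinates are closest
    (in Euclidean distance) to the coordinates decoded from brain activity."""
    return min(candidates, key=lambda name: math.dist(decoded, candidates[name]))

# Invented object-space coordinates for a small pool of candidate images.
candidate_images = {
    "image_a": (1.0, 1.0),
    "image_b": (-1.0, 1.0),
    "image_c": (-1.0, -1.0),
    "image_d": (1.0, -1.0),
}

# Invented coordinates decoded from one trial of neural recordings.
decoded_point = (0.8, -1.2)
best_match = nearest_image(decoded_point, candidate_images)
```

The more complete the map, the closer decoded coordinates should land to those of the image actually shown, which is why reconstruction accuracy serves as a test of how much of object space the four networks cover.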

“We now know which features are important for object recognition,” says Bao. “The similarity between the important features observed in both biological visual systems and deep networks suggests the two systems might share a similar computational mechanism for object recognition. Indeed, this is the first time, to my knowledge, that a deep network has made a prediction about a feature of the brain that was not known before and turned out to be true. I think we are very close to figuring out how the primate brain solves the object recognition problem.”
