To navigate successfully in an environment, you need to continuously track the speed and direction of your head, even in the dark. Researchers at the Sainsbury Wellcome Center at UCL have discovered how individual cells and networks of cells in an area of the brain called the retrosplenial cortex encode this angular head motion in mice to enable navigation both during the day and at night.

“When you sit on a moving train, the world passes your window at the speed of the motion of the carriage, but objects in the external world are also moving around relative to one another. One of the main aims of our lab is to understand how the brain uses external and internal information to tell the difference between allocentric and egocentric-based motion. This paper is the first step in helping us understand whether individual cells actually have access to both self-motion and, when available, the resultant external visual motion signals,” said Troy Margrie, Associate Director of the Sainsbury Wellcome Center and corresponding author on the paper.

In the study, published today in Neuron, the SWC researchers found that the retrosplenial cortex uses vestibular signals to encode the speed and direction of the head. However, when the lights are on, the coding of head motion is significantly more accurate.

“When the lights are on, visual landmarks are available to better estimate your own speed (at which your head is moving). If you can’t very reliably encode your head turning speed, then you very quickly lose your sense of direction. This might explain why, particularly in novel environments, we become much worse at navigating once the lights are turned out,” said Troy Margrie.

To understand how the brain enables navigation with and without visual cues, the researchers recorded from neurons across all layers of the retrosplenial cortex while the animals roamed freely around a large arena. This enabled the neuroscientists to identify neurons called angular head velocity (AHV) cells, which track the speed and direction of the head.

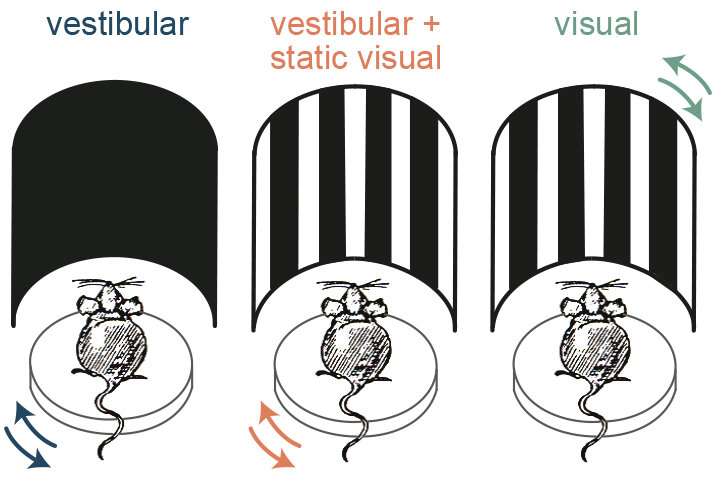

Sepiedeh Keshavarzi, Senior Research Fellow in the Margrie Lab and lead author on the paper, then recorded from these same AHV neurons under head-fixed conditions, which allowed specific sensory/motor information to be removed. By comparing very precise angular head rotations in the dark and in the presence of a visual cue (vertical gratings) with the results from the freely moving condition, Keshavarzi was able to determine that while vestibular inputs alone can generate angular head velocity signals, their sensitivity to head motion speed is vastly improved when visual information is available.

“While it was already known that the retrosplenial cortex is involved in the encoding of spatial orientation and self-motion guided navigation, this study allowed us to look at integration at both a network and cellular level. We showed that a single cell can see both kinds of signals: vestibular and visual. What was also critically important was the development of a behavioral task that enabled us to determine that mice improve their estimation of their own head angular speed when a visual cue is present. It’s pretty compelling that both the coding of head motion and the mouse’s estimates of their motion speed both significantly improve when visual cues are available,” commented Troy Margrie.
